Responding When CSOs & NGOs Are Targeted

Disinformation Toolkit 2.0


Developing and deploying strategies for anticipating and responding to disinformation is an evolving area of practice. Organizations must support staff in developing thoughtful, dynamic methods to prepare and respond. This requires moving from ad hoc responses to more streamlined, systematic workflows and processes, which will vary depending on the particular risks that organizations, staff, and programs face. If your organization operates in a high-risk context, note that this document provides general guidance and should not be treated as a detailed guide; additional analysis will be needed to mitigate safety risks.

Staff Roles

In thinking about how disinformation does or may affect your organization and its work, engaging relevant teams across your organization is crucial. Technical, program, communications, and security staff will each have an important role in your organization’s response to disinformation.

For Technical Staff and Program Designers:

At the program design phase, depending on the program's focus and potential for political exposure, consider researching historical disinformation narratives or cultural flashpoints related to your work. Communications professionals should also consider building rumor tracking and response methods into communications plans, particularly for programs with high public visibility.

For Program Managers and Staff:

When managing a program, liaise with project communications staff and your headquarters communications team to build in periodic reviews of social media chatter related to your organization, your project, and the focus of your work in-country. When rumors and gossip appear, be ready to liaise with your organization's global communications and security teams to supplement your project's technical expertise and redeploy budgeted resources as necessary.

For Communications Staff:

Discuss your organization’s disinformation-related risks to identify potential weak spots and opportunities for proactively preparing for a possible attack, including pre-bunking or anticipating narratives and pre-seeding messaging on social media and through partners. Conduct a media threat assessment as part of larger risk assessments (see the Risk Assessment Tool at the end of this section) and seek to answer the following questions:

- Has your organization suffered from a disinformation event before? How did you respond, and what procedures are in place guiding such a response?

- If so, was the organization able to determine who was behind it and why?

- What steps did the organization take before the disinformation event? Are these defensive steps still valid or sufficient? Why or why not?

- What steps were taken after the attack to inoculate the targeted projects, individuals, or local partners against future attacks? Have these steps been formalized in policy for other projects or individuals who may be at high risk of disinformation attacks?

Train team members on how to identify disinformation:

- Are you aware of what early warning signals might be? For example, are you aware of what a bot might look like? Do you know how to detect a false domain?

- Consider whether it is better for your organization to respond in its own voice, or to work with mission-aligned local partners and thought leaders to respond through authentic local voices.

For Security Staff:

Disparaging attacks against organizations and leaders, even if false, have in the past posed physical threats to local offices and individuals. Security leads should therefore be briefed on online disinformation as they develop risk mitigation and emergency crisis response plans, and should seek to answer the following questions:

- Who might gain by undermining your organization’s credibility?

- What tools do they have at their disposal (e.g., access to state media)?

- What is the appropriate response, if any at all?

Security staff might also conduct a digital security assessment and provide recommendations on secure data collection and communication, particularly in country contexts where there is high risk of surveillance.

Lastly, determine whether there is an active community of practice or network among NGO or CSO security staff to share information about mis- and disinformation threats, targets, and risks, and to strategize in the event that a coordinated response is warranted. Collective security management has proven helpful in such circumstances, and domestic security networks should also be linked to their global counterparts.

Assessing Digital Security Risks

The Global Interagency Security Forum provides guidance on digital security for non-experts in humanitarian contexts. This guide is available in English, Spanish, and French.

For Legal and Policy Advisors:

Legal and policy advisors associated with international civil society organizations and NGOs should consider the following steps when their organizations are targeted by disinformation.

- Which legal and regulatory frameworks are applicable in the relevant country context? It’s important to understand how local laws and policies may relate to free speech, mis- and disinformation, and what qualifies as an infringement of rights.

- What are the political dynamics of the problem? What is the likely source? What is the likely intent? How might this impact the response strategy?

- Conduct stakeholder and power analysis and mapping, and develop a strategy for action that includes domestic, regional, and global actors as relevant (working with communications, program, security, and other teams).

Developing a Risk Mitigation Plan

This section summarizes steps you might consider taking to develop a strategy for identifying and responding to online disinformation that could affect your organization's operations and the safety of your staff. Think about your strategy in five parts, which are detailed below:

- Evaluate your media and information ecosystem to determine where your disinformation risk is greatest.

- Determine who is spreading the false information about your organization, leaders, or programs and develop a hypothesis about why they are sharing this information.

- Determine what they are spreading or saying and how it is spreading.

- Determine whether and how to counter this information and work with your organization’s leaders to design workflows within your organization.

- Confer with like-minded NGOs or similar stakeholders and develop a collective understanding and response plan to disinformation attacks.

The following are approaches to taking these actions. These suggestions should be viewed as conversation starters for you and your staff that will require additional institutionalization, based on your organization’s work and structure. The steps that you decide to take should be tailored to the unique context in which your organization operates.

1. YOUR MEDIA ECOSYSTEM

Understand all the forms of media through which disinformation spreads, including print, websites, and social media. One of the most important ecosystems to analyze is the online media environment in which your programs operate. A good first question to ask is: How vulnerable is my media environment to abuse? Consult your national staff and learn how information flows within the communities that matter most to your organization. If context-appropriate, conduct a rapid, anonymous survey among beneficiaries to determine their access to TV, radio, and newspapers; access to technology, including mobile phones and mobile data; adoption of messaging apps and social media; and the community information hubs or influential people in your community of focus. Keep in mind that collecting or storing data about beneficiaries or affected populations digitally can be dangerous, especially in contexts where the authorities surveilling civil society are also perpetrators of disinformation.

Possible discussion questions for your project or program communications staff could include:

Questions about your audience:

- How do people get information about news, politics, and their community? How does the answer change with gender, age, economic status, location, and other key demographic factors?

- What are the sources of information most important for political news (e.g., people, institutions, technology tools)?

- How does the nature of these sources affect their spread and influence in your community (e.g., information in newspapers travels much more slowly than information on Facebook)?

- What information sources seem to matter to your core audiences?

Questions about your threats:

- Who are the distributors (i.e., who shares the posts that go viral) that affect your work or your organization? Are there specific Facebook or messaging groups that are particularly active?

- Who are likely creators (i.e., who develops the content that goes viral) of false claims that affect your work or your organization? This refers both to individuals and organizations that may be propagating such claims.

- Do you have any hypotheses on how they disseminate their information and messages?

- What are their motivations?

2. WHO CREATES DISINFORMATION? WHY?

Disinformation researchers cite two primary types of actors that create and disseminate disinformation content:

- State or state-aligned groups and political actors with political goals. In the Philippines, the president’s office has built a propaganda machine, in the form of fake accounts and bot networks, that disparages organizations and journalists and disseminates narratives with specific political goals.

- Non-state actors, such as terrorist organizations, extremist groups, political parties, and corporate actors. These groups pursue political aims such as recruiting supporters, creating confusion, or disparaging groups that oppose them. During the migrant crisis in the Mediterranean, anti-immigration news outlets published a number of false stories claiming that a large international NGO was working with human traffickers as part of its search and rescue program. Although the stories were false, the NGO was forced to divert valuable resources to fight the accusations.

Be careful to distinguish politically motivated groups from individuals and groups that create and disseminate false information for economic gain. These actors have found ways to earn a living from false information, and they may help state and non-state actors achieve their political goals. In the United States, reports of Macedonian teenagers running false-content farms showed how such cottage industries generate revenue and have built an industry around creating and disseminating false information. Politically motivated propagators, by contrast, aim to sow confusion or discontent among targeted communities. In Myanmar, for example, Facebook has repeatedly been flooded after major terrorist attacks with doctored photos and false information about the attacks from outside sources.

3. WHAT ARE THEY SAYING? WHERE IS IT APPEARING?

Disinformation is disseminated online through websites; social media platforms, such as Facebook and Twitter; and messaging applications, such as WhatsApp, Facebook Messenger, Telegram, LINE, Viber, and Instagram. However, the medium will vary depending on how actors seek to reach their intended audiences. Commonly cited places where disinformation has appeared include the following:

- Websites

- Facebook pages

- Messages through Facebook Messenger, WhatsApp, Viber, Telegram, LINE, Instagram, and others

- Posts in public or private Facebook groups

- Comments on highly visible news pages

- Traditional newspapers, radio, and television

Organizations may consider developing a system to log problematic posts, photos, or text content in a spreadsheet as they occur, and sharing these materials with other groups that are experiencing attacks or observing worrisome trends. Aggregating this information may allow research partners to identify the sources and networks driving the spread of disinformation.
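A log like the one described above can be as simple as a shared spreadsheet. The following Python sketch shows one possible structure, assuming a CSV file that staff append to; the field names (`observed_on`, `platform`, `narrative`, and so on) and the helper functions are hypothetical choices, not a standard format. Because digital records can be dangerous in surveillance-heavy contexts, the sketch records the content and where it appeared rather than personal details about the people sharing it, and any real log should be stored with access controls suited to your risk context.

```python
import csv
from collections import Counter
from dataclasses import asdict, dataclass, fields


@dataclass
class LoggedPost:
    # Hypothetical fields; adapt them to your own tracking needs.
    observed_on: str      # ISO date the item was seen, e.g. "2021-03-04"
    platform: str         # e.g. "Facebook group", "WhatsApp", "news comments"
    url_or_location: str  # link, or a description if no link exists
    content_summary: str  # short paraphrase of the claim
    narrative: str        # recurring theme this item belongs to
    reported_by: str      # staff member or partner who flagged it


FIELDNAMES = [f.name for f in fields(LoggedPost)]


def append_entry(path, entry):
    """Append one entry to the CSV log, writing a header if the file is new or empty."""
    try:
        with open(path, newline="", encoding="utf-8") as fh:
            has_header = fh.read(1) != ""
    except FileNotFoundError:
        has_header = False
    with open(path, "a", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDNAMES)
        if not has_header:
            writer.writeheader()
        writer.writerow(asdict(entry))


def read_entries(path):
    """Load every logged entry back into LoggedPost objects."""
    with open(path, newline="", encoding="utf-8") as fh:
        return [LoggedPost(**row) for row in csv.DictReader(fh)]


def count_by(entries, field_name):
    """Count entries per value of one field, e.g. per narrative or platform."""
    return Counter(getattr(e, field_name) for e in entries)
```

Counting entries by `narrative` or `platform` gives a quick view of which themes and channels recur, which is the kind of aggregate you might share with partners or researchers.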

Rumor Tracking How-to Guides

The USAID Breakthrough Action program has developed two brief guides to help project teams develop rumor tracking systems:

More details on each are provided in the Misinformation, Global Health and COVID-19 section.

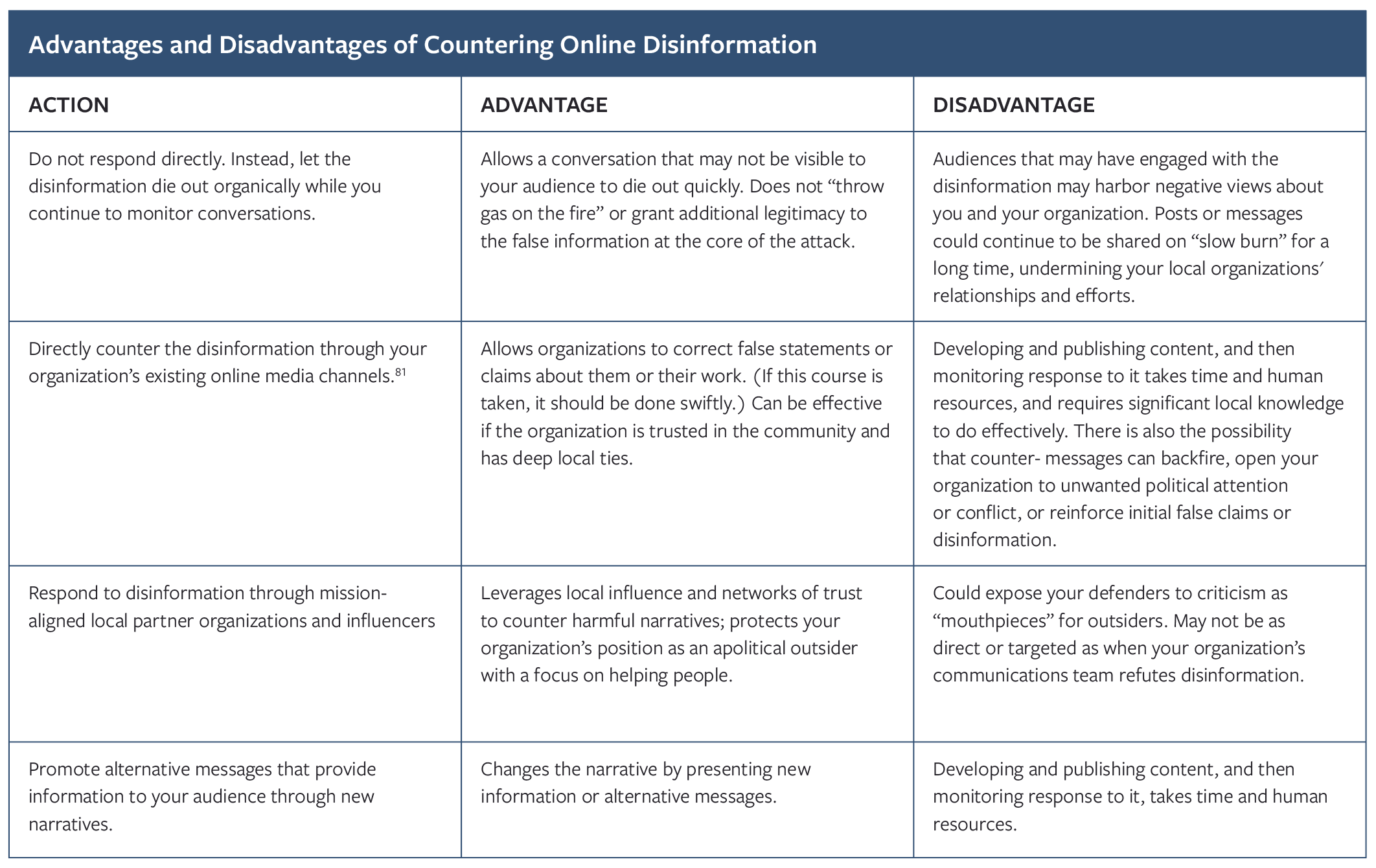

4. DECIDE WHETHER AND HOW TO TAKE FURTHER ACTION

Have discussions with your communications and security teams to determine whether actions need to be taken to counter disinformation, such as responding via your organization’s existing online media channels. Depending on the circumstances and your organization’s goals, the following options may be appropriate for your response to disinformation attacks:

5. CONFER WITH LIKE-MINDED NGOS AND ORGANIZATIONS

Identify and coordinate with partners who share the same vulnerabilities. Consider joining the country-level NGO forum or relevant country-focused InterAction Working Group if your organization is not already a member. There is significant value in identifying and working with like-minded organizations to discuss vulnerabilities and attacks when they occur.

Although disinformation attacks often target individuals and institutions, they are frequently aimed more broadly at civil society organizations, national NGOs, or international NGOs in humanitarian settings. Given these shared vulnerabilities, it is typically more effective and safer to join forces, pool resources, and undertake collective analysis, joint strategy development, and action planning. Speaking collectively also helps when engaging U.N. agencies and other international organizations, which may have access to more resources as part of a collective response strategy.

As an example of collective response, InterAction's Together Project has developed a space for Muslim-interest foundations in the U.S. to find allies who can carry important messages to different constituencies, including larger interfaith coalitions. These relationships have allowed the alliance to strategically deploy surrogates to promote positive messages at the local level (whether commemorating a holiday, supporting disaster response, or sharing content around significant political events) and to members of Congress when advocating for specific issues. Working together as a network to address the problem has been an essential part of sharing insights and brainstorming solutions.

If your organization has experienced a disinformation attack with international media coverage, you may also consider the following actions:

- Archive social media content. If this is an area of increased vulnerability for you, consider connecting with open source investigation labs or media organizations that focus on archiving social media information (see recommendations in the Databases of Disinformation Partners, Initiatives and Tools section of this document).

- Discuss the event with partners and donors so that you can "pre-bunk" the narrative before they hear it from other sources. Review what happened to you and your colleagues with these and other critical stakeholders.

- Contact vendors. Disinformation is also an urgent issue for technology platforms to address. If you encountered problems when asking platforms directly to remove content, tell your organization's policy contact.

- Conduct a formal after-event assessment. Discuss how you would have handled the event differently and what resources you wish you had had. Assess the experience and work across your organization to establish protocols that prepare you and your colleagues for the next event.

Engaging & Enabling Local Staff

It is important for local staff teams to be involved in threat assessment and response activities related to disinformation. Local staff's knowledge of local language(s), history, politics, and culture positions them to identify problematic trends and narratives more quickly and easily as they occur. Additionally, their access to a broader range of communications channels, including traditional and digital media as well as contacts such as friends and family, gives local staff valuable perspectives on what an appropriate and proportionate response might be. Ensure that your project or program communications teams are led or staffed by local people. Discuss and agree on standard operating procedures for team members to share the patterns and behaviors they observe.


- Develop an internal system for documenting and reporting instances of disinformation online that may affect an organization’s operations (see Rumor Tracking How-To Guides above or Internews’ guide to Managing Misinformation in a Humanitarian Context below).

- Discuss the issue with staff and designate a preferred method of communication about it, to highlight the importance of sharing events internally when they occur. Doing so gives organizational leaders a more accurate picture of threats against the organization in real time.

Managing Misinformation in a Humanitarian Context

This document from Internews is a detailed technical guide for organizations working with affected populations in humanitarian contexts, covering audience preferences, rumor tracking, and response.

Longer-Term Strategies: Building Community Resilience

Proactive measures to establish relationships, build trust, and share information about who organizations are and what they are doing help create a strong defense against false claims. Conversely, groups with weak community relationships that rarely share information will be more susceptible to disinformation attacks. Practitioners know this work is essential, but it is often deprioritized when working under stress or in crisis environments where immediate relief or protection is needed. Below are suggestions for getting started quickly and preparing your organization for if and when an unexpected disinformation event occurs.

- If resources permit, have your project communications team proactively develop relationships with credible information sources. Based on the media ecosystem assessment suggested above, build relationships with a network of trusted journalists. Organize one-on-one meetings to brief them on your work, regularly invite them to your events and activities if appropriate, and maintain a drumbeat of information to these journalists.

- Develop a plan for proactively communicating who you are and what you do locally. Working on sensitive issues means there is often a tension between needing to be discreet and needing to be more vocal to correct inaccurate information or promote accurate details. Encouraging the spread of your messages can help you shape your narratives and help others reject information that may be inconsistent with their beliefs about your organization. If you do not proactively share what your organization does and what you stand for—or work with local partners to do so—then someone else may fill information gaps with inaccurate information.

- International NGOs and civil society organizations often feel uncomfortable proactively advocating for their work. At times this is due to operational concerns about sensitive work; at other times it reflects a desire to spend resources on efforts perceived as more directly related to the work itself. Organizations can often do more to promote who they are and the work they do, and to proactively share these messages with their partners and stakeholders. Discuss with your colleagues how to balance proactive communications about your activities and events against the potential risk of that information negatively affecting the communities you support.

In Practice

CSOs and NGOs should work to anticipate risk and share resources before a crisis. NGOs have noted the benefit of developing systems for translating stock messages for use in crisis situations. Through its proactive communications program, "Words of Relief," Translators Without Borders translates critical messages before crises occur. The organization developed a library of statements on topics such as flood warnings so that people are better informed and more resilient when attacks or disasters occur. This approach was deployed successfully through the Red Cross and the International Federation of Red Cross and Red Crescent Societies (IFRC) during the 2017 hurricane season in the Caribbean, when messages were translated into Creole and Spanish in late September and October 2017. Translators Without Borders emphasized the need to provide relevant content in an accessible format.

While this toolkit focuses primarily on online disinformation campaigns, some audiences receive and share information through other mechanisms, such as traditional media, because they lack access to technology or connectivity, or do not trust online sources. Effective responses to disinformation need to account for the information landscape in each particular context.

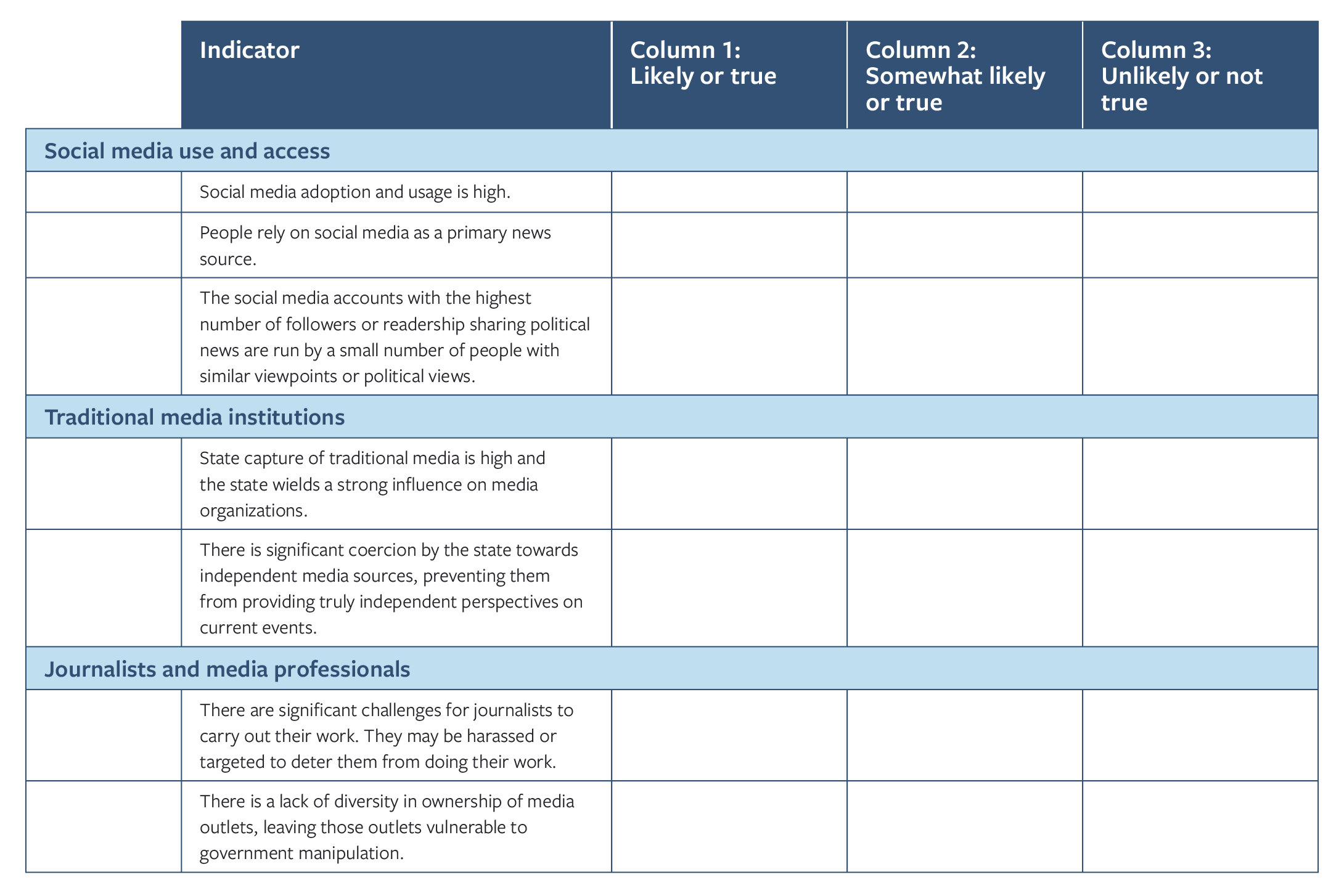

Risk Assessment Tool: Assessing the Vulnerability of Your Media Environment

Specific factors make media more prone to abuse in areas undergoing a major transition or conflict. Assessing the presence of these factors can help you and your colleagues determine how vulnerable media might be to abuse by state and non-state actors.

How to use this tool:

On the worksheet, mark a tally under "likely," "somewhat likely," or "unlikely" for each indicator. Total the tallies in each column at the bottom of the worksheet. Once you've evaluated your risks, multiply your Column 1 total by two and add the Column 2 total. Then read the description below that corresponds to your score.

12-22: High Vulnerability

Prioritize developing a disinformation response plan with your in-country colleagues. Continue to monitor threats and update your plan as needed.

6-11: Medium Vulnerability

Discuss a disinformation response plan with your in-country colleagues as a team. Continue to monitor threats and update your plan as needed.

1-5: Low Vulnerability

Ask your communications and security staff to develop a response plan.
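The scoring rule above amounts to counting each "likely" tally as 2 points, each "somewhat likely" tally as 1 point, and each "unlikely" tally as 0. The Python sketch below assumes that Column 1 holds the "likely" tallies and Column 2 the "somewhat likely" tallies; the function names are hypothetical. The tool's bands start at 1, so treating a score of 0 (every indicator "unlikely") as low vulnerability is an assumption of this sketch.

```python
from typing import Sequence

# Assumed mapping: Column 1 ("likely") counts double, Column 2 ("somewhat likely")
# counts once, and "unlikely" tallies add nothing to the score.
WEIGHTS = {"likely": 2, "somewhat likely": 1, "unlikely": 0}


def vulnerability_score(ratings: Sequence[str]) -> int:
    """Return (Column 1 total x 2) + Column 2 total for one rating per indicator."""
    unknown = [r for r in ratings if r not in WEIGHTS]
    if unknown:
        raise ValueError(f"unrecognized ratings: {unknown}")
    return sum(WEIGHTS[r] for r in ratings)


def vulnerability_band(score: int) -> str:
    """Map a score onto the tool's bands (12-22 high, 6-11 medium, 1-5 low)."""
    if score >= 12:
        return "High Vulnerability"
    if score >= 6:
        return "Medium Vulnerability"
    # Assumption: a score of 0 is not listed in the tool, so it is treated as low.
    return "Low Vulnerability"
```

For example, 4 "likely" and 3 "somewhat likely" tallies give 4 × 2 + 3 = 11, which falls in the Medium Vulnerability band.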